This technical post breaks down congestion control mechanisms, focusing on the role of the transport layer.

When multiple devices attempt to send an excessive volume of data simultaneously, the network can become overwhelmed.

This phenomenon, known as congestion, leads to performance degradation, causing packets to be delayed or even lost.

What Is Congestion Control and Where Does It Occur?

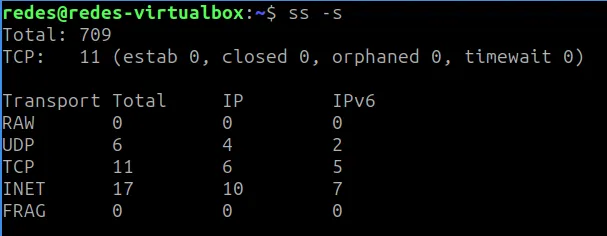

Congestion control is a shared responsibility between the network layer and the transport layer.

- Network Layer: This is where congestion is detected, as routers are the components that struggle with overflowing traffic and packet queues.

- Transport Layer: This is the source of the problem, as it’s the transport entities (protocols like TCP) that send the packets into the network.

Therefore, the most effective solution to mitigate congestion is to have the transport protocols reduce their packet sending rate.
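As a rough illustration of a sender slowing down when congestion is signaled, here is a minimal sketch of an additive-increase/multiplicative-decrease (AIMD) rule, the general approach TCP is known for. The function names, the constants, and the boolean `congested` signal are assumptions made for this example and do not come from any real TCP implementation.

```python
def adjust_rate(rate, congested, increase=1.0, decrease_factor=0.5, min_rate=1.0):
    """Return the next sending rate (packets per round trip) for one sender.

    Hypothetical helper: all parameter values are illustrative only.
    """
    if congested:
        # Back off multiplicatively when the network signals congestion.
        return max(min_rate, rate * decrease_factor)
    # Otherwise probe gently for spare capacity with a small additive step.
    return rate + increase


def simulate(signals, start_rate=10.0):
    """Apply adjust_rate over a sequence of congestion signals (True/False)."""
    rates = [start_rate]
    for congested in signals:
        rates.append(adjust_rate(rates[-1], congested))
    return rates


if __name__ == "__main__":
    # Two quiet rounds, one congestion signal, then another quiet round.
    print(simulate([False, False, True, False]))
```

The asymmetry is the point of the sketch: the sender gives up rate quickly when congestion appears and regains it slowly afterwards.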

The internet fundamentally relies on these mechanisms to maintain its stability and performance.

The Real Goal: Optimal Bandwidth Allocation

The purpose of congestion control extends beyond simply preventing network collapse. The primary objective is to achieve a bandwidth allocation that is simultaneously:

- Efficient: It utilizes all available network capacity.

- Fair: It distributes capacity equitably among competing entities.

- Adaptive: It responds quickly to changes in traffic demand.

An allocation that meets these criteria ensures high performance without overloading the infrastructure.

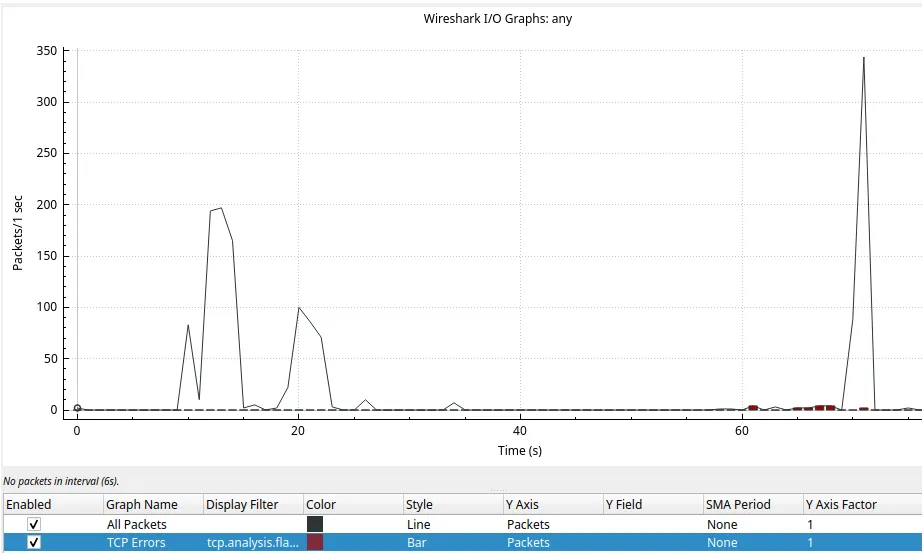

The Relationship Between Load, Goodput, and Delay

It is crucial to understand the dynamic between the load offered to the network and the resulting performance.

- Goodput (Effective Throughput): This refers to the rate of useful packets that successfully arrive at the destination. Initially, goodput increases linearly with the load. However, as the load approaches the link’s maximum capacity, the growth of goodput slows down due to packet accumulation in buffers and an increased probability of loss.

- Delay: At low load, delay is relatively constant, representing the propagation time. As the load increases, delay rises slowly at first and then sharply, as packets spend more time waiting in router queues.
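To get a feel for how delay behaves as load approaches capacity, the sketch below uses a simple M/M/1 queueing model, in which mean queueing delay is 1 / (capacity - load). The model choice, the `mean_delay` helper, and the 100 packets-per-second capacity are assumptions for illustration; real routers and traffic do not follow this model exactly.

```python
def mean_delay(load, capacity, propagation=0.0):
    """Mean delay in seconds under an assumed M/M/1 model.

    load and capacity are in packets per second; propagation is a fixed
    delay in seconds that dominates when the load is low.
    """
    if not 0 <= load < capacity:
        raise ValueError("load must satisfy 0 <= load < capacity")
    return propagation + 1.0 / (capacity - load)


if __name__ == "__main__":
    capacity = 100.0  # hypothetical link capacity, packets per second
    for utilization in (0.1, 0.5, 0.9, 0.99):
        load = utilization * capacity
        delay_ms = mean_delay(load, capacity) * 1000
        print(f"{utilization:.0%} load -> {delay_ms:.1f} ms")
```

Under this assumption, moving from 50% to 90% utilization multiplies the queueing delay by five, which is the steep rise described above.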

If a transport protocol is poorly designed and retransmits packets that were merely delayed (and not lost), the network can enter a state of congestion collapse.

In this scenario, senders keep transmitting packets at a high rate, but the amount of useful data being delivered plummets.

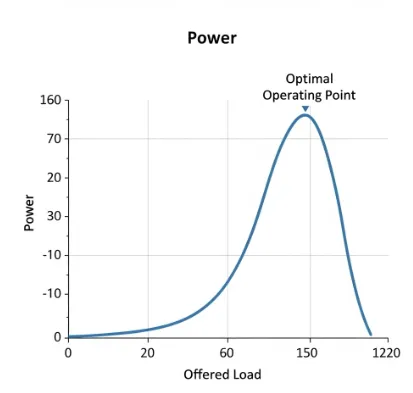

Maximizing Efficiency with the Power Metric

The point where performance begins to degrade is the “onset of congestion.” To operate the network efficiently, it is desirable to allocate bandwidth up to a point just before the sharp increase in delay, that is, below the maximum theoretical capacity.

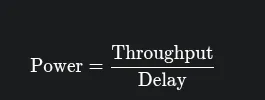

To identify this optimal point, Kleinrock (1979) proposed the Power metric, defined by the following relationship:

$$\text{Power} = \frac{\text{Load}}{\text{Delay}}$$

The Power of a connection increases with the load as long as the delay remains low. However, it reaches a peak and then begins to fall as the delay grows sharply.

The load that corresponds to the maximum Power represents the most efficient operating point for the network.
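As a small worked example, the sketch below takes Power as load divided by delay and searches for the load that maximizes it. The delay model is an assumption: an M/M/1 queue with delay 1 / (capacity - load) and no propagation delay. The `power` and `best_load` helpers and the capacity value are hypothetical.

```python
def power(load, capacity):
    """Power = load / delay, with an assumed M/M/1 delay of 1 / (capacity - load)."""
    delay = 1.0 / (capacity - load)
    return load / delay


def best_load(capacity, steps=1000):
    """Scan evenly spaced loads strictly between 0 and capacity; return the Power-maximizing one."""
    candidates = [capacity * i / steps for i in range(1, steps)]
    return max(candidates, key=lambda load: power(load, capacity))


if __name__ == "__main__":
    capacity = 100.0  # hypothetical link capacity, packets per second
    print(f"Power peaks near a load of {best_load(capacity):.1f} packets/s")
```

With these assumptions, Power simplifies to load × (capacity - load), so the peak falls at half the capacity. Other delay models would shift the peak, but the shape (rise, peak, fall) is the same.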

Conclusion: Operating at the Sweet Spot

In summary, congestion control at the transport layer is not just about preventing network collapse; it’s about finding a bandwidth allocation that maximizes efficiency.

By monitoring metrics like throughput and delay, and by using concepts like the Power metric, congestion control algorithms aim to keep the network operating at its peak performance point, ensuring fast and stable communication for all users.

see more:

https://datatracker.ietf.org/doc/html/rfc9293

Juliana Mascarenhas

Data Scientist and Master in Computer Modeling by LNCC.

Computer Engineer